Thinking strategically about connectedness in communication and learning using the Mediated Interaction Framework

Frameworks imperfectly explain the world, but they’re also invaluable – particularly when evaluating decisions that will affect the results you desire.

From an executive perspective, those results tend to fall into four categories: top line, bottom line, risk, and time-to-result.

Even in non-profit or government environments that may not use the same language (e.g., you don’t “make sales”), the essential categories of productivity, stewardship of resources, mitigating risk, and time-to-result govern (or should) a leader’s or strategist’s perspective.

And as we get rolling, one warning: this is a long-form post wherein we’ll look at:

- The organizational dynamics that drove me to create this explanatory framework to begin with (including an example story of a headscratcher decision)

- Determining the value of data, why orgs often only give it lip service, and what to ask

- Using the framework: questions to ask when strategizing content (particularly learning and development content)

Why the Mediated Interaction Framework came to be (and why it’s important)

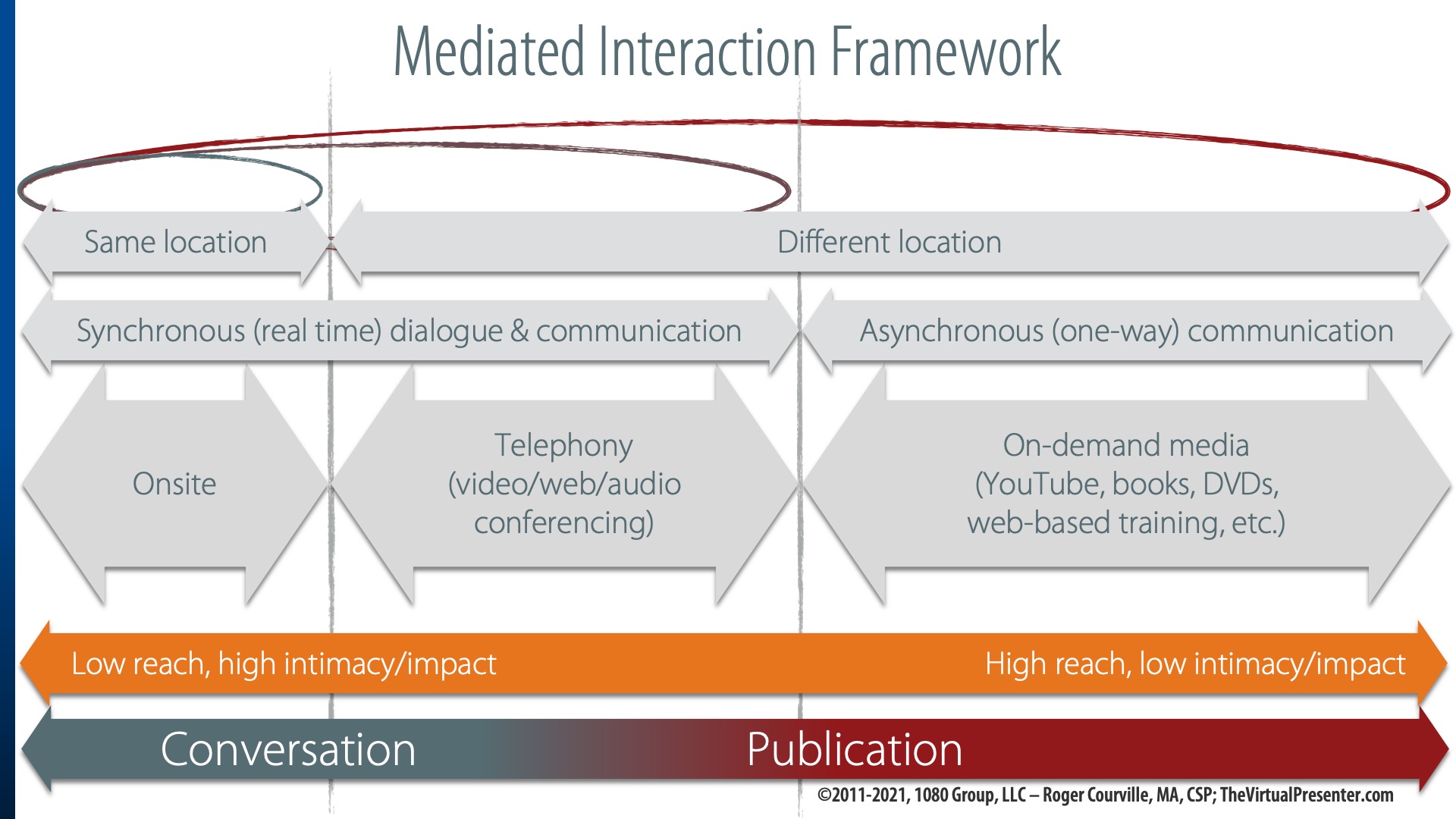

The Mediated Interaction Framework attempts to help communication and learning strategists consider the nature of interpersonal connectedness relative to tradeoffs and decisions about the media through which communication/learning happen.

I developed it after repeatedly interacting with people stuck working in organizations with overly simplistic learning strategies related to virtual classes, events, and webinars. And it’ll take some space in the post, but here’s an example by way of story:

The setting: a workforce-learning conference where I was speaking a few years ago. Sitting down at a table, a gal saw the “presenter” tag on my badge and asked what I was speaking about (which was thinking strategically in designing virtual classrooms into an overall learning ecosystem).

Her quick reply: “Oh, good. I know one session I don’t need to go to.”

I inquired as to why.

It turns out that she worked for a global footwear brand (of swooshy persuasion), and she told me that the mandate from on high was to move all training to on-demand media. I clarified, “All of it? No synchronous, instructor-led training of any sort, online or onsite?” Nope.

As you might imagine, I asked additional clarifying questions. Their simple (simplistic) reason – a reason you see illuminated in the framework – came down to reach and cost (the far right). In their case this likely would have been evaluated as “lowest cost per unit of knowledge delivered.”

The point here isn’t to throw Swooshy Shoes under the bus. It’s to point out an all-too-common example: they were “buying on price” instead of effectiveness.

To be sure, on-demand media sometimes is the right choice, but she assured me this had not been evaluated relative to effectiveness…it was a cost-driven decision. And she agreed that the overall cost to the organization wasn’t part of the discussion (read: one department optimized its own spend at the expense of greater overall cost to the organization).

The value of data… and the challenge of being data-driven

Besides intra-organizational turf wars, why do orgs fall into this trap? Not following the data.

Usually because they don’t have it, albeit for good reasons – reasons you should be able to illuminate.

Everyone loves the term ROI (return on investment), but few actually invest in calculating it at a level granular enough to guide operational decisions.

To explain why, let’s ‘bottom line’ this thing with a single principle:

Optimization drives valuation.

In other words, to use the failed “swooshy shoes” example as a case, we’d want to have explored the results delivered from ILT/vILT relative to (meaning compared to) the results from on-demand media, and those in relationship to their respective costs.

The premise of optimization is that every medium of communication has a right time and place wherein it is the best possible choice.

By analogy, you can tell a story in a book or a movie, but the discipline and cost (and a bunch of other things) are very different for producing them. And while someone you know will argue that books are better than movies (or vice versa), the point is that they’re different…some things you can deliver much better in a book than a movie and vice versa. The same is true of the tradeoffs to be evaluated in choosing media as part of learning and communication strategy.

And therein lies the challenge:

Technically anything can be isolated, measured, and monetized (how you’d actually get to calculating ROI).

But gathering data is itself an expense. <– Read that again.

This leads to the primary reason why most orgs don’t actually have fully data-driven optimization:

- The value of data is NOT (fully) that it helps you make better decisions (e.g., see the executive metrics at the beginning of this post).

- The value of data IS the delta between your improved decision (“X”) versus your less-informed decision (“Y”) that is then weighed against the cost of acquiring that data (“Z”).

- The value of data: (X - Y) / Z.
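
To make the arithmetic concrete, here is a minimal sketch that reads the formula as the delta (X minus Y) divided by the cost of the data (Z). The function name and every dollar figure are hypothetical, invented purely for illustration; they are not drawn from any real organization.

```python
def value_of_data(improved: float, baseline: float, data_cost: float) -> float:
    """Return (X - Y) / Z: the gain from the better-informed decision
    per unit of money spent acquiring the data."""
    if data_cost <= 0:
        raise ValueError("data_cost must be positive")
    return (improved - baseline) / data_cost


# Hypothetical figures, for illustration only.
x = 120_000  # X: result of the data-informed decision
y = 100_000  # Y: result of the less-informed (gut-check) decision
z = 40_000   # Z: cost of gathering and analyzing the data

ratio = value_of_data(x, y, z)
print(f"{ratio:.2f}")  # prints 0.50
```

With these made-up numbers the ratio comes out below 1, meaning the data cost more than the improvement it bought. That is the kind of case where leaning on judgment instead may be a defensible call.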

This means most orgs, like individuals in their daily lives, don’t make decisions fully according to the data. They exercise judgment and intuition.

Which is where a framework comes in…to at least think through the gut checks in terms of principles and tradeoffs versus overly-simplistic numbers (like cost alone, in the case of some division inside the swooshy shoe company).

One principle that’s easy to communicate to others: optimization drives valuation.

Using the framework: understanding the categories

Again, the Mediated Interaction Framework attempts to help communication and learning strategists consider the nature of interpersonal connectedness relative to tradeoffs and decisions about the media through which communication/learning happen.

For me, my expertise was first in understanding the theory of transactional distance and medium theory in light of real-time-but-distant communication (e.g., webinars, vILT, virtual keynotes, etc.). The problem to resolve was the disparity between a) most webinars (if not virtual classes) being “talk AT you” experiences and b) the research on andragogy (theory of adult learning), which points to the need to have participants be just that – participating, actively, not passively.

I’m not the first to suggest a framework built on place and time. A typical one is a 4-box grid wherein the X-Y axes are place and time, each distinguished by “same” and “different” (e.g., place can be same or different, as can time).

But such a frame yields two problems. One, users always look at the “same place, different time” box and wonder how it fits (the media form would be something like a self-directed kiosk). Two, it doesn’t instantly illuminate the tradeoff of intimacy/impact vs reach.

Perhaps most importantly, what I’ve found over teaching this to thousands of people is that they need something they can repeat in shorthand.

That became “conversation versus publication.”

To be certain, you could stand in an in-person setting and deliver the equivalent of “publication.” That’d be a lecture with no Q&A.

At the other end, publication on something like YouTube or Facebook or an LMS may involve asynchronous interaction at a rapid enough clip that it’d be, at least for brief periods of time, synchronous. This would be like you trading a series of text messages with someone – you might focus your attention for some period of time (you’re communicating semi-synchronously or even synchronously), but then you put it down only to come back to it 15 minutes later.

The takeaway: The question I always lay on the table is, “What is the best part of human connectedness, and how do we bring the best of that to our learners or audience?”

And just so we’re clear: that’s pretty much “never” when it comes to infobarfing over PowerPoint.

Using the framework: asking questions

All of this leads to the importance of thinking more critically (if not strategically) when planning and designing. What follows is not a system or method, but simply questions that follow from the above observations and may serve as prompts when thinking through anything from the simple (e.g., delivering a live webinar) to the complex (e.g., a multi-part, multi-media learning program).

What’s the value (to us) of human interaction (if not connectedness)?

One of many potential examples, but perhaps the most poignant offender, is asking this of people knowing that they’ll say, “High!” The idea of then quantifying the value versus additional expense will nearly always be foreign, but you can then prompt discussion of what it might be, even if it’s a gut check. If you don’t, it’s about as useful as asking if someone would rather drink a $150 bottle of wine or a $10 bottle.

What’s the value of ensuring every participant has the same experience?

Here are two examples of potentially many. Perhaps the worst offender is hybrid onsite/online audiences (driven by someone’s desire to not present online) – the online audience has a totally different psychosocial experience. A less obvious example would be a webinar or virtual class that includes some participants on mobile devices (with small screens, possibly with fewer or less usable features).

Does it need to be real time (synchronous)?

Example: If you’re going to deliver a live, virtual presentation, but the audience experience is passive (akin to watching a YouTube video), why do it live? You’re asking a higher ticket price from the attendee (show up at 11am on Tuesday) for something that could possibly be delivered at a more convenient time (they can watch when they want to) or even with a better user experience (fast forward or rewind buttons).

What’s the rationale for a higher-cost investment in a richer media form?

This may be viewed on the framework either horizontally or vertically. A horizontal example might be the live-vs-on-demand example in the previous question. Another might be “Why do an in-person class when one online will work?” Vertical examples might be something like, “Why produce an on-demand video when a simple audio recording will work?” (in the ‘on demand’ section to the right). Or it might be “Does the presenter really need a PowerPoint deck?” (for the “in person” section on the left).

What’s the rationale for doing this in one session versus multiple sessions?

Why is a webinar typically one hour long? Or if you move an all-day training online, might you better take advantage of spaced repetition (if not make it easier on the learners) by splitting it up?

The bottom line

The above queries are not, by any stretch, all the questions you might ask. The point is to start asking questions.

And because you’ll inevitably bubble up issues for which there is no data, inference to the best explanation may be what you need to rely upon. In other words, this doesn’t mean there isn’t some form of research or evidence that might inform the decision – the question is whether or not you can form an argument to support your position.*

Importantly, this also is where you’ll run into organizational politics. Because everyone’s got an opinion and thinks their gut-check is valid.

Maybe. Maybe not.

But hopefully you’ll be better prepared to ask questions that lead to better strategy and design for your communications and training programs, onsite or online, real time or on demand.

Roger

*For example, I’ve often answered the question, “When delivering a webinar, should I speak at the same rate of speech as normal, or at a higher or lower rate of speech?” There is no data, but there are at least two studies that looked at rate of speech (one for college debate teams, another for efficacy of inside/telephone sales). From here I present an abductive argument: “if this is true in these studies and in these circumstances, I posit that you should….” (not the point of this post! <snicker>).